Contents

Editorial: Optimizing for Change: Why Exuberance is Required

Essays

AI

OpenAI Takes Big Steps Toward Its Long-Planned Reorganization

OpenAI Says Spending to Rise to $115B Through 2029: Information

ElevenLabs CEO/Co-Founder, Mati Staniszewski: The Untold Story of Europe’s Fastest Growing AI Startup

ASML to Invest $1.5 Billion in Mistral, the French A.I. Start-Up

The AI Agent Reality Check: Why Managing Always-On AI Workers Is Your Next Big Challenge

OpenAI Signs $300 Billion Data Center Pact With Tech Giant Oracle

Media

Venture

Education

Interview of the Week

Startup of the Week

Post of the Week

Editorial:

Optimizing for Change. Why Exuberance is Required.

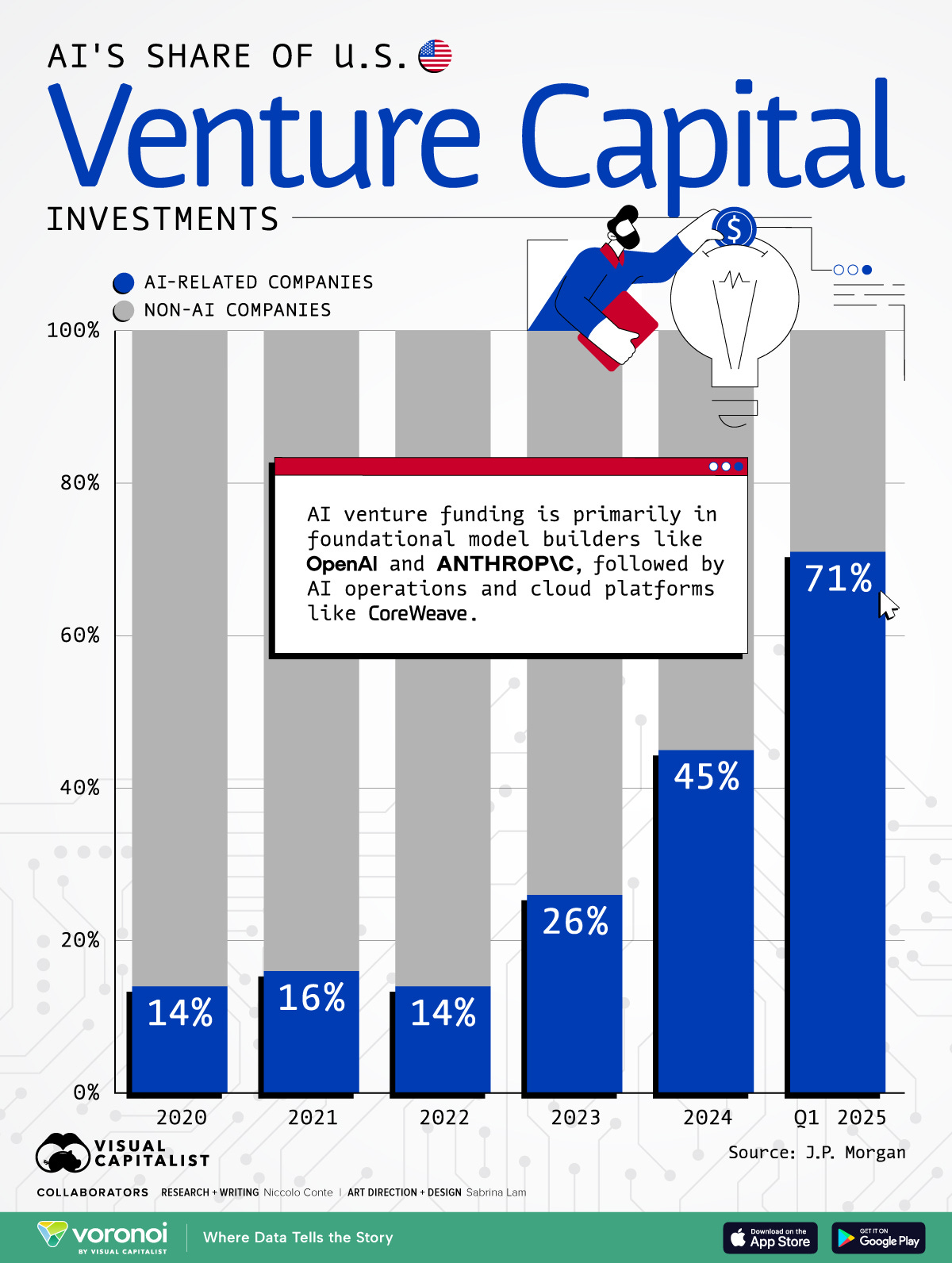

This week there is a lot of focus on the spending associated with AI. OpenAI and Oracle inking a $300 billion deal and ASML in Europe investing well over $1 billion in Mistral are drivers of the conversation. “Exuberance” and “bubble” are being used a lot in link-seeking headlines.

But these are contentless labels, ignoring the possibility that what we are seeing is not exuberance but rational investment in a humanity-transforming technology. The underlying cause of the negativity is simply disbelief, not intellect. Fear masquerading as thought.

What looks like excess is, in fact, urgency. A $300B OpenAI–Oracle data center pact, $115B of projected OpenAI spend through 2029, and a $100B shift of ownership to OpenAI’s nonprofit aren’t vanity metrics; they’re the scaffolding of a new civic utility. Pair that with ASML’s €1.3–1.5B bet on Mistral and SpaceX’s $17B spectrum deal for direct‑to‑phone connectivity from space, and the signal is unmistakable: we’re industrializing intelligence, not just iterating software.

Investment is not an option, it is a necessity if the human impact of AI is to be realized. The measure of that is in answering the question of AI’s impact on the Gross Domestic Product of the world. Today global GDP is about $108 trillion annually. Most sanguine forecasters suggest the minimum return on investment from AI will be to add $20 trillion to global GDP by 2035. Others go way higher, suggesting a doubling of GDP. AI is already 2-3% of global GDP and we are only in year 2 of real revenue production.

OpenAI and Anthropic are leading the charge here, and are able to garner large amounts of capital due to the potential outcomes.

Does this mean that the current valuations will survive and grow? There is no way to know, because valuations are driven by forward-looking models and current sentiment about them. But will value be created far above the investments we are seeing? Absolutely yes.

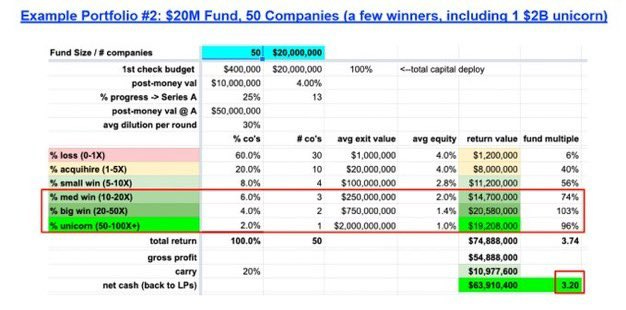

Jon Callaghan of True Ventures states this plainly in his essay about Capex:

Instead of thinking “this time is different”, I’d rather assume that “this time things will be approximately the same” in terms of how we estimate the scale of value to be created, especially the relationship of early capex spending to application layer buildout and value.

The waves of innovation in these past 3 decades have “rhymed” (or to update this analogy, “looped”) in terms of certain patterns, one of which I think of as the “Capex Multiplier.” Web 1.0, Web 2.0, Cloud/SaaS, and Mobile have all shown a consistent pattern of early capex foundation investment followed by the creation of tremendous value over time at the application layer. How much value? About 5x-10x the initial infrastructure investment.

In the case of AI the multiplier is likely to be far higher because of the prize, global access to human knowledge and the ability to act on it, delivered for almost free to most humans.

Again Jon:

We’re squarely in the AI capex buildout now, with most estimates converging around $2 trillion in AI infrastructure spending over this five-year period. Just last week, Jensen upped his prediction of AI capex to $3 trillion to $4 trillion over the next 5 years, and McKinsey & Co puts the number at $7 trillion by 2030.

Applying a 5-7x Capex Multiplier to these numbers yields a staggering approximation of $15 trillion to $30 trillion in net new value to be created at the application layer over the next couple of decades, or less. (Some waves have been faster than others).
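The arithmetic behind that range can be sketched in a few lines. This is only an illustration of the multiplier logic, not Jon's model: the capex figures are the three estimates quoted above, and the function name `implied_value` is made up for this sketch. Under these inputs, the $3 trillion to $4 trillion estimate comes closest to the quoted $15 trillion to $30 trillion.

```python
# Capex estimates quoted above, in trillions of USD, as (low, high) pairs.
capex_estimates = {
    "most estimates": (2.0, 2.0),
    "Jensen": (3.0, 4.0),
    "McKinsey": (7.0, 7.0),
}

# The 5-7x Capex Multiplier from the quote.
MULTIPLIER_RANGE = (5, 7)


def implied_value(capex_low, capex_high, mult_low, mult_high):
    """Return the (low, high) application-layer value implied by a capex range."""
    return capex_low * mult_low, capex_high * mult_high


for source, (low, high) in capex_estimates.items():
    value_low, value_high = implied_value(low, high, *MULTIPLIER_RANGE)
    print(f"{source}: ${value_low:.0f}T to ${value_high:.0f}T")
```

The spread between the smallest and largest result shows how sensitive the headline number is to which capex forecast you start from.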

This week’s essay about Yamanaka Factors is a good case study in potential. By studying proteins, scientists have reversed aging in mice. Rebuilt decaying cells. Extended life. The belief is that this will be usable with humans. The economic consequences are unmeasurable.

Another case: SpaceX is buying EchoStar’s spectrum so it can deliver global high-bandwidth internet from space to the entire planet. A $17 billion bet for a far higher payout.

Finally, Apple’s new AirPods Pro 3, delivering real-time translation between speakers of two different languages, leveraging models that run on an iPhone and the noise cancellation feature of the AirPods. This is the Star Trek ‘universal translator’, but hundreds of years early.

What does this mean? Investment and spending numbers have to be measured against outcomes. There is no bubble or exuberance if the outcomes dwarf the costs. Timing? Well, it can take decades of course, and there can be ups and downs along the journey. But with AI I would bet on a far shorter payback due to the speed of innovation and the breadth of applications.

Just like the AirPods Pro 3, AI is going to be built into everything and will improve human life. Is there any price too high to pay for the gains that will produce?

Our stance is unambiguous: lean in. The week’s stories show the costs are high because the prize is human—faster care (biotech and Yamanaka‑era rejuvenation pipelines), wider opportunity (creators, investors, students), stronger institutions (augmentation over automation). Exuberance is justified when paired with clear guardrails and measurable outcomes. Build the capacity. Codify the rights. Then let the work compound.

Essays

🚨 Elon Musk: "The actions of the West are indistinguishable from suicide."

YouTube • All-In Podcast • September 9, 2025

GeoPolitics•USA•Elon Musk•Essay•Western Civilization

Core message

A brief clip captures Elon Musk declaring that “the actions of the West are indistinguishable from suicide,” a stark warning about the direction of Western societies. Delivered in the conversational, rapid-fire format typical of short-form video, the line functions as a thesis: that a cluster of prevailing decisions and cultural reflexes, when aggregated, could undermine the West’s long-run vitality and security. The tone is urgent and polemical, designed to provoke reflection on whether current trajectories in policy and culture align with long-term survival and competitiveness.

Context and framing

The short isolates a single, memorable line rather than a detailed argument, inviting viewers to infer the backdrop: longstanding debates about Western economic competitiveness, institutional effectiveness, social cohesion, and strategic clarity. The framing suggests Musk views current choices not as discrete missteps but as interconnected patterns with compounding effects. The clip’s brevity and emphatic phrasing emphasize salience over nuance, a strategy well-suited to social distribution and shareability.

Key themes signaled

Civilizational resilience: The “suicide” metaphor implies systemic self-harm—decisions that degrade capacity to innovate, defend interests, or maintain social confidence.

Policy inertia vs. dynamism: The remark hints at frustration with bureaucratic drag and risk-averse governance that may slow technological progress or industrial renewal.

Strategic coherence: It gestures toward the need for consistent priorities across energy, defense, technology, and economic policy to avoid cross-purpose actions.

Cultural feedback loops: The statement suggests cultural norms can either reinforce or erode ambition, excellence, and demographic or economic vitality, depending on how they reward behaviors and allocate status.

Implications and stakes

If taken seriously, the line challenges leaders and institutions to stress-test whether current choices are additive or erosive to Western strength over decades, not just news cycles. The metaphor underscores compounding risk: fragmented policies and symbolic gestures can aggregate into meaningful declines in productivity, deterrence, or social trust. It also implies that course correction is possible: diagnosing “suicidal” patterns is a call for agency—re-aligning incentives, accelerating constructive innovation, and reducing self-imposed constraints that do not materially advance safety or prosperity.

Why this resonates in short form

Virality through paradox: “Indistinguishable from suicide” pairs moral gravity with rhetorical compression, making it memorable and debate-sparking.

Audience activation: Viewers project their own policy concerns—energy, industry, migration, defense, education, regulation—onto the line, broadening relevance.

Agenda-setting: By elevating the frame from single-issue complaints to a civilizational lens, the clip encourages integrative thinking across domains typically siloed in public debate.

Considerations and counterpoints

While the clip’s strength is urgency, its weakness is underspecification: without enumerated policies or metrics, the claim risks overgeneralization. Critics might argue the West retains unmatched assets—rule of law, deep capital markets, top research universities, and alliances—that complicate any “suicide” narrative. Supporters could reply that precisely because the West starts from strength, complacency is the greater danger; the line is intended to jolt stakeholders into safeguarding advantages before they erode.

Key takeaways

The clip elevates a civilizational risk frame, arguing that current Western choices collectively threaten long-term strength.

It prioritizes urgency and memorability over specificity, functioning as a prompt for broader debate rather than a policy brief.

The remark implies a call to action: re-align policy, culture, and incentives with durable competitiveness, innovation, and social cohesion.

Palantir CEO Alex Karp on Why Modern Progressivism is Broken

YouTube • All-In Podcast • September 10, 2025

Media•Broadcasting•Palantir•AlexKarp•Progressivism

Overview

Palantir CEO Alex Karp argues that modern progressivism is “broken,” framing his critique around outcomes versus intentions. Speaking in a compact exchange, he contrasts moral rhetoric with practical governance, suggesting that a fixation on signaling virtue has crowded out competence, security, and the ability to solve concrete problems. He positions himself as results-first, drawing from Palantir’s work with national security and government agencies to claim that real-world constraints, not ideological purity, should drive policy and technology choices. The tone is combative but pragmatic: Karp says the measure of any political project is whether it keeps citizens safe, preserves freedoms, and delivers services efficiently.

Core Arguments

Progressivism’s focus on performative ideals: Karp contends that contemporary progressive politics prioritizes language, posturing, and symbolic wins over measurable societal improvements.

Competence over slogans: He maintains that critical domains—public safety, border integrity, and defense readiness—require operational excellence and hard trade-offs rather than aspirational narratives.

Technology as a governance lever: Referencing his vantage point in enterprise software, Karp implies that modern states must adopt high-grade data systems and AI responsibly to make timely, accountable decisions, especially in security contexts.

On Security, Freedom, and Responsibility

Karp links safety to liberal values, arguing that civil liberties become fragile when governments can’t maintain order or deter threats. He suggests that a functioning liberal society needs both robust security apparatuses and strong guardrails for privacy and civil rights. The failure, in his telling, is not progressivism’s goals—fairness, inclusion, justice—but its inability to build institutions and tools that actually deliver them. He urges a more “adult” politics that accepts trade-offs, sets priorities, and measures results, especially where technology and policy intersect.

Implications for Policy and Tech

Procurement and delivery: Karp implies that modernizing government technology stacks—and rewarding what works—should outrank ideological loyalty in hiring and contracting.

AI governance: He gestures toward a middle path that welcomes AI for defense, safety, and service delivery while imposing transparent accountability to protect civil liberties.

Social contract: A credible progressive (or any) politics, he argues, must reduce crime, secure borders, and improve everyday public services; otherwise, voters will defect to alternatives promising order and competence.

Key Takeaways

Outcomes matter more than moral messaging; voters evaluate safety, efficiency, and prosperity, not slogans.

Governments need operational competence and modern software to meet contemporary threats and social needs.

A sustainable liberal order balances security with rights; neglecting either undermines both.

Technologists and policymakers should collaborate on measurable goals, rigorous evaluation, and transparent oversight to regain public trust.

Yamanaka Factors

Matthewharris • Matthew Harris • September 6, 2025

Essay•Education•Yamanaka Factors•Partial Reprogramming•Regenerative Medicine

Well, in mice, they're using these Yamanaka factors to make the mice age the equivalent of like 250 years now. It's really incredible. And there are human clinical trials starting. So the Yamanaka factors, you guys will recall, are the four proteins that were identified that basically can turn any cell back into a stem cell.

Later, there was research done where they took those four Yamanaka factors and they applied a low dose of them to a cell. And rather than have the cell turn all the way back into a stem cell, that cell effectively became young again.

It started to repair and heal itself, repair its DNA, repair its gene expression networks, and the cell returned back to its original state. So the equivalent to think about this in a body is now you've got skin that loses its wrinkles, eye cells that start to see better, brain that starts to work better, muscles that start to work better, and so that is rejuvenation.

What are Yamanaka Factors?

You may already know the headline idea. Four genes named Oct4, Sox2, Klf4, and c-Myc can push an old cell back toward a youthful state. Scientists discovered this in 2006, then showed it in human cells in 2007. Turning these genes on fully wipes cell identity and creates stem cells.

The translational move is different. You turn a subset on, and only for short pulses, so the cell keeps its identity, yet regains youthful programs. That is called partial reprogramming.

Yamanaka factors are named after Shinya Yamanaka, who discovered them in 2006 in his lab in Kyoto. The four specific transcription factors he discovered are Oct3/4, Sox2, Klf4, and c-Myc.

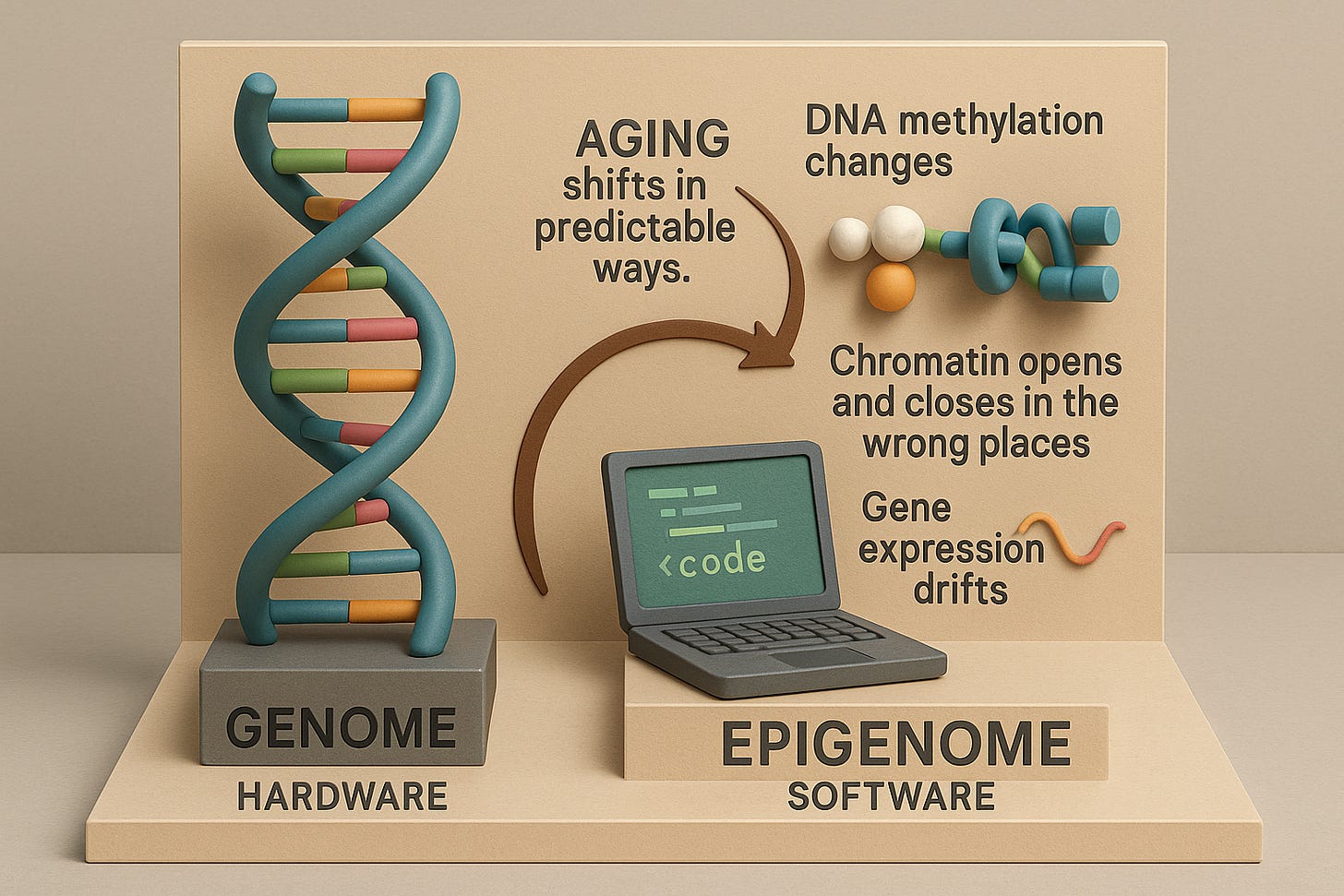

Fast primer for context

Think of the genome as hardware, and the epigenome as software that tells the hardware what to run. Aging shifts that software in predictable ways. DNA methylation changes, chromatin opens and closes in the wrong places, and gene expression drifts.

Yamanaka factors act like a software terminal. Used carefully, they do not replace the hardware, they debug damaged blocks of code toward a more youthful pattern so the code runs the way it used to.

The 'data moats' fallacy

Platforms • September 7, 2025

Essay•AI•Data Moats•NetflixVsBlockbuster•Fulfilment Architecture

Netflix vs. Blockbuster is one of those well-worn stories that suggest quick and obvious explanations - all of which successfully miss the point.

The first misconception is that Netflix’s streaming beat Blockbuster’s DVD rental. But Netflix was way ahead way before streaming entered the picture.

If you rewind the tape a little further, another generic explanation is Netflix’s better customer experience - no more late fees, no more humiliation at the checkout counter when you returned “Titanic” three days late.

The trouble is, Blockbuster did adapt. Once Netflix’s no-late-fee policy started luring away customers, Blockbuster eliminated late fees too. When Netflix’s DVD-by-mail model gained steam, Blockbuster copied that as well, complete with its own red envelopes. On the surface, it matched Netflix feature for feature. Yet Blockbuster still collapsed.

Finally, the last bastion of defence: Netflix had better data, which helped it personalize recommendations for its customers.

But it’s yet another case of true, but utterly useless.

The real reason - fulfilment architecture

In a 2020 post, Amazon is a logistics beast, I explain what really helped Netflix stand out:

The one thing that Blockbuster could never compete with was the integration of demand-side queuing data (users would add movies that they wanted to watch next into a queue) with a national-scale logistics system. All this queueing data aggregated at a national scale informed Netflix on upcoming demand for DVDs across the country.

Blockbuster could only serve users based on DVD inventory available at a local store. This resulted in:

1) low availability of some titles (local demand > local supply), and

2) low utilization of other titles (local supply > local demand).

Netflix, on the other hand, could move DVDs to different parts of the US based on where users were queueing those titles. This resulted in higher availability while also having fewer titles idle at any point.

Queueing data improved stocking and resulted in higher utilization and higher availability. It allowed Netflix to serve local demand using national inventory.
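The pooling effect described above can be shown with a toy calculation. Everything here is hypothetical: the region names, queue counts, and copy counts are invented for illustration, and the sketch ignores shipping time and cost, which a real logistics system would have to handle.

```python
def fulfilled_locally(demand, supply):
    """Each region can only serve demand from its own shelf stock."""
    return sum(min(demand[region], supply[region]) for region in demand)


def fulfilled_pooled(demand, supply):
    """Copies can be routed to any region, so only the totals matter."""
    return min(sum(demand.values()), sum(supply.values()))


# Hypothetical numbers for a single title: queued requests and copies on hand.
demand = {"Northeast": 120, "South": 40, "West": 90}
supply = {"Northeast": 80, "South": 100, "West": 70}

total_copies = sum(supply.values())
local = fulfilled_locally(demand, supply)
pooled = fulfilled_pooled(demand, supply)

print(f"local-only: {local} requests served, {total_copies - local} copies idle")
print(f"pooled:     {pooled} requests served, {total_copies - pooled} copies idle")
```

With the same total number of copies, the store-by-store model leaves some requests unmet while other copies sit idle, and the pooled model serves more requests with less idle stock. Queue data is what tells the pooled system where to send each copy.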

Debunking the ‘data is our moat’ fallacy

The “data is our moat” fallacy is the belief that simply accumulating large amounts of proprietary data automatically creates a durable competitive advantage.

Defensible advantage in data-driven businesses does not come from having data in isolation, but from the data-informed architecture a company builds.

What matters is the architecture that emerges when data is embedded in the system itself. Netflix’s queue created an entirely new operating model. Competitors could mimic features and even acquire similar datasets, but they could not easily rip out and rebuild their architecture without unraveling their existing model.

The puzzle of Netflix versus Blockbuster, in other words, was not about who had the data, but about who used it to reconfigure the logic of the business. Blockbuster never escaped the gravity of its old architecture.

In short, the fallacy is mistaking data as an asset in isolation for data-informed system design. The former is often transient; the latter, when well-architected, can produce durable advantage.

AI as Normal Technology, revisited

Platformer • Casey Newton • September 9, 2025

Essay•AI

Overview

The piece revisits a now-influential argument about artificial intelligence as a “normal technology” whose full societal impact unfolds over decades rather than months or years. The authors behind a widely cited timelines paper reportedly continue to hold that view: enduring transformation requires long diffusion cycles, complementary investments, and institutional adaptation. The core tension the essay probes is whether, despite those long-run dynamics, many of the promised disruptions appear to have already arrived in everyday life.

What the “normal technology” view means

AI is framed as a general-purpose technology whose value compounds through complementary assets: data pipelines, computing infrastructure, redesigned workflows, and a workforce retrained to use new tools.

Major productivity gains follow a J-curve: initial excitement and visible demos, then a valley of process reengineering and policy work, and finally broad-based gains once organizations absorb the technology.

Timelines are thus measured in decades because diffusion, standards, procurement, and governance move far slower than product demos.

Evidence that change feels immediate

Consumer and workplace behaviors are shifting: conversational search and lightweight agents in productivity suites; code generation and data analysis tools lowering barriers for non-specialists; and AI-assisted media creation reshaping editorial and marketing workflows.

In education, the rapid appearance of AI tutors, grading aids, and writing support is reshaping classroom norms, assignment design, and academic integrity conversations.

The visibility of these shifts creates a perception gap: localized, high-impact use cases can give the impression of total transformation even while many sectors remain largely untouched.

Reconciling the paradox

Both can be true: early, concentrated impact and long-run, society-wide transformation. Early adopters and knowledge workers experience step changes; lagging sectors (manufacturing, healthcare delivery, public services) require standards, liability clarity, and integration with legacy systems.

Measurement lags obscure progress. Traditional productivity statistics and firm-level KPIs often miss quality improvements, reduced time-to-draft, or new product varieties until they are scaled across entire organizations.

Diffusion depends on complements: secure data access, audit trails, reliable model behavior, and governance for safety and privacy. Without these, pilots stall before organization-wide rollout.

Education as a proving ground

Rapid policy oscillation—from bans to structured adoption—illustrates the institutional work needed to harness AI while preserving learning goals.

The emerging consensus emphasizes: teaching AI literacy; shifting assessment toward process, oral defense, and applied projects; and integrating tools transparently rather than policing impossible boundaries.

Equity questions loom: access to capable devices, paid models, and high-quality guidance may widen achievement gaps unless institutions provision shared resources and training.

Risks and guardrails

Hallucinations, bias, and privacy leakage remain operational risks; mitigation requires model choice, retrieval-augmented workflows, and clear human-in-the-loop checkpoints.

Over-reliance can deskill students and workers if assignments and tasks are not redesigned to cultivate judgment, critique, and domain understanding.

Governance will likely standardize around provenance, logging, and impact assessments, aligning incentives for safe deployment without stifling experimentation.

Implications for leaders

Treat AI adoption as an organizational change program, not a feature toggle: invest in data readiness, role definitions, and training.

Pilot where benefits are clear and risks are bounded; codify playbooks; then scale. Expect a multi-year path from promising prototypes to firm-wide transformation.

In education and knowledge work, prioritize transparent use, skill-building, and assessment redesign to turn AI into a complement rather than a crutch.

Key takeaways

The “decades-long transformation” thesis emphasizes complements, diffusion, and institutional change.

Fast-moving, high-visibility use cases can coexist with slow, system-wide adoption.

Education shows both the pace of change and the governance work required.

Sustainable impact depends on infrastructure, skills, and policy—not model demos alone.

Does valuation matter any more in the age of AI?

FT • September 8, 2025

Essay•AI•Valuation

Thesis and Context

The core argument is that in markets reshaped by artificial intelligence, the durability of a company’s revenue growth and profit margins can be more important than whether a stock looks optically “cheap” on traditional metrics. Cheapness (low price-to-earnings, price-to-book, or enterprise-value-to-sales ratios) may signal cyclical or structural headwinds, while durable growth with resilient margins reflects business models that can harness AI-driven efficiencies, network effects, and product differentiation over long horizons. In such an environment, investors prioritize the persistence and quality of cash flows rather than snapshot multiples that may understate a company’s compounding potential.

Why Durability Can Outweigh Cheapness

Durable growth indicates recurring demand, pricing power, and high switching costs—features often amplified by AI-enabled products and data moats.

Sustained profit margins point to defensible competitive advantages, efficient cost structures, and scalability; AI can deepen these through automation, personalization, and improved decisioning.

Traditional value screens can misclassify winners: companies investing heavily in AI or software may appear expensive today but compound at superior rates for longer than typical cycles.

What ‘Durable Growth’ Looks Like in the AI Era

Recurring revenue and embedded products that become “must-have” workflows; AI augments these with continuous learning, making offerings stickier.

Data advantages: proprietary or high-quality data sets improve model performance, reinforcing customer value and lowering churn.

Distribution and ecosystems: platforms with developer and partner networks leverage AI features across multiple products, extending lifetime value.

Operating leverage from AI: customer support, sales, and R&D productivity gains can expand margins even as the business scales.

Implications for Valuation

Higher multiples may be rational if a company’s growth runway and margin resilience are credibly long-dated. The key variable is duration: how many years of above-market growth and elevated returns on invested capital are plausible before mean reversion?

Valuation work shifts from single-period comparables to multi-scenario discounted cash flow thinking that stress-tests growth persistence, margin trajectories, and capital intensity (including AI infrastructure).

Quality of earnings matters: cash conversion, unit economics, and cohort retention provide better signals of durability than headline revenue growth alone.
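To make the duration point concrete, here is a minimal sketch of a discounted cash flow calculation that varies only the number of high-growth years. It is not the article's model: the function name `dcf_value`, the 25% growth rate, the 10% discount rate, and the 3% terminal growth rate are all assumptions chosen for illustration.

```python
def dcf_value(cash_flow, growth, growth_years,
              discount_rate=0.10, terminal_growth=0.03):
    """Value a cash-flow stream with a high-growth phase and a Gordon terminal value.

    cash_flow is the current annual cash flow; it grows at `growth` for
    `growth_years`, then at `terminal_growth` forever.
    """
    if discount_rate <= terminal_growth:
        raise ValueError("discount_rate must exceed terminal_growth")

    value = 0.0
    cf = cash_flow
    for year in range(1, growth_years + 1):
        cf *= 1 + growth
        value += cf / (1 + discount_rate) ** year

    # Terminal value at the end of the high-growth phase, discounted back.
    terminal = cf * (1 + terminal_growth) / (discount_rate - terminal_growth)
    value += terminal / (1 + discount_rate) ** growth_years
    return value


# Same business today, different assumptions about how long growth lasts.
for years in (0, 3, 5, 10):
    multiple = dcf_value(1.0, growth=0.25, growth_years=years)
    print(f"{years:>2} years of 25% growth -> value of {multiple:.1f}x current cash flow")
```

Because the starting cash flow is 1.0, each printed value reads directly as a multiple of today's cash flow. Stress-testing means rerunning this with different growth, duration, and discount assumptions and seeing how quickly the justified multiple moves.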

Signals to Assess Durability vs. Optical Cheapness

Evidence of pricing power (e.g., successful upsells to AI-enhanced tiers) and stable or rising gross margins despite input cost volatility.

Customer metrics—net revenue retention, expansion rates, contract duration—indicating products are becoming more embedded as AI capabilities improve outcomes.

Efficiency metrics—operating margin trends, R&D productivity, and sales efficiency—that reflect AI-driven leverage rather than one-off cost cuts.

Competitive dynamics: barriers created by data, distribution, compliance, and partnerships can make AI capabilities hard to replicate.

Risks and Counterpoints

Capital intensity can rise: training and inference costs, along with infrastructure spending, may compress margins for firms without sufficient scale or pricing power.

Commoditization risk: if AI features become baseline across an industry, differentiation narrows and multiples should compress toward historical norms.

Regulatory and trust constraints: governance, privacy, and model risk management can add costs and slow deployment, tempering margin expansion.

Forecast error: durability narratives can be overextended; when growth normalizes, high-multiple stocks can de-rate sharply.

Investor Playbook

Focus on the triangle of growth, margins, and reinvestment discipline. Favor companies demonstrating expanding addressable markets from AI features, rising free cash flow margins over time, and prudent capital allocation.

Use scenario analysis to test how long superior growth and margins can persist under varying compute costs, competitive responses, and regulatory regimes.

Distinguish between efficiency-led margin gains (sustainable) and temporary cuts (fragile). Look for product-led pricing and customer outcomes as anchors of endurance.

Key Takeaways

In an AI-driven market, the length and resilience of exceptional growth and margins can justify higher valuations.

Traditional cheapness metrics risk missing compounding businesses where AI enhances moats and operating leverage.

The analytical edge lies in assessing durability—customer stickiness, data advantages, pricing power, and scalable efficiency—rather than chasing low multiples.

iPhones 17 and the Sugar Water Trap

Stratechery • Ben Thompson • September 10, 2025

Essay•AI•iPhone

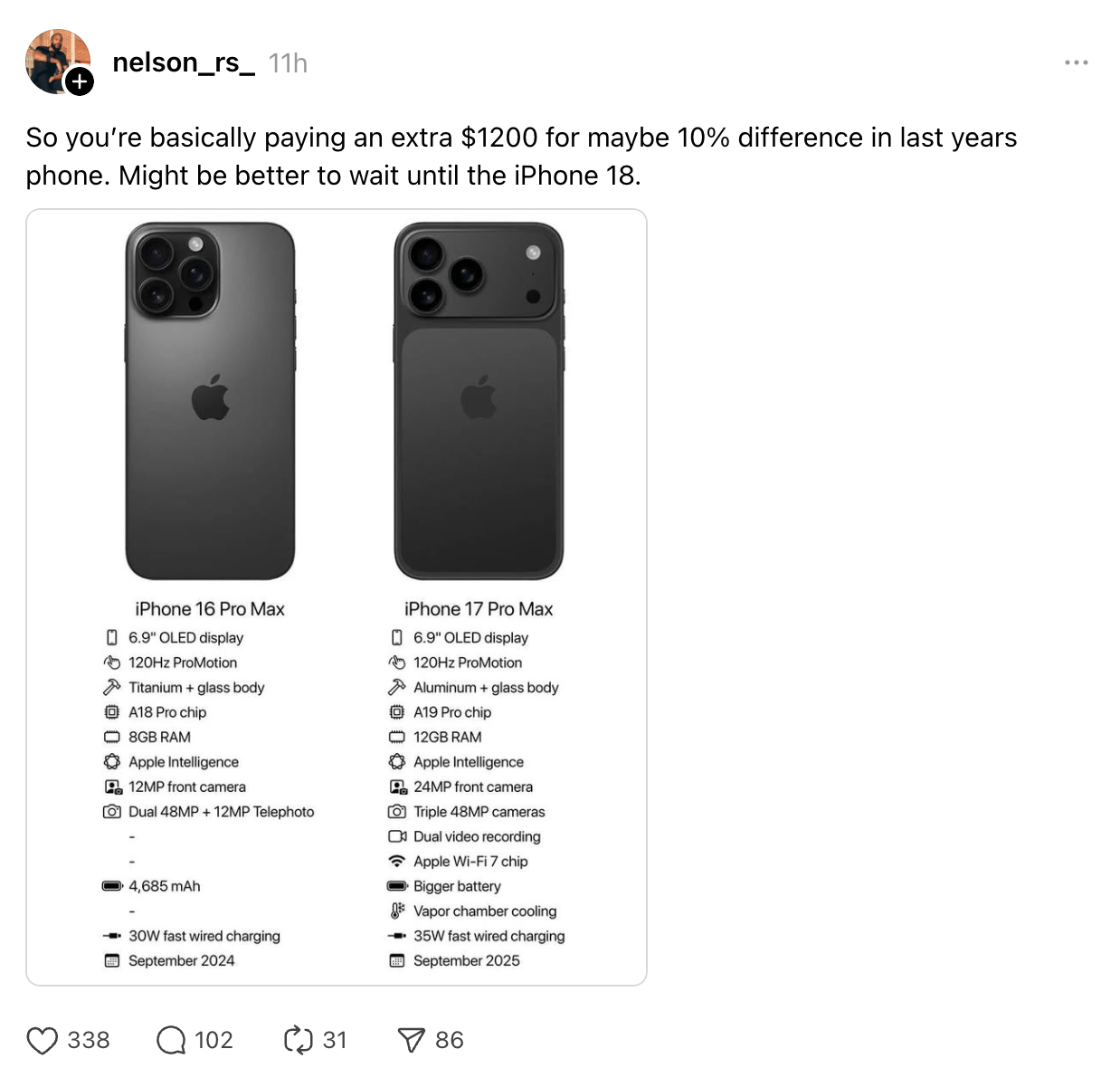

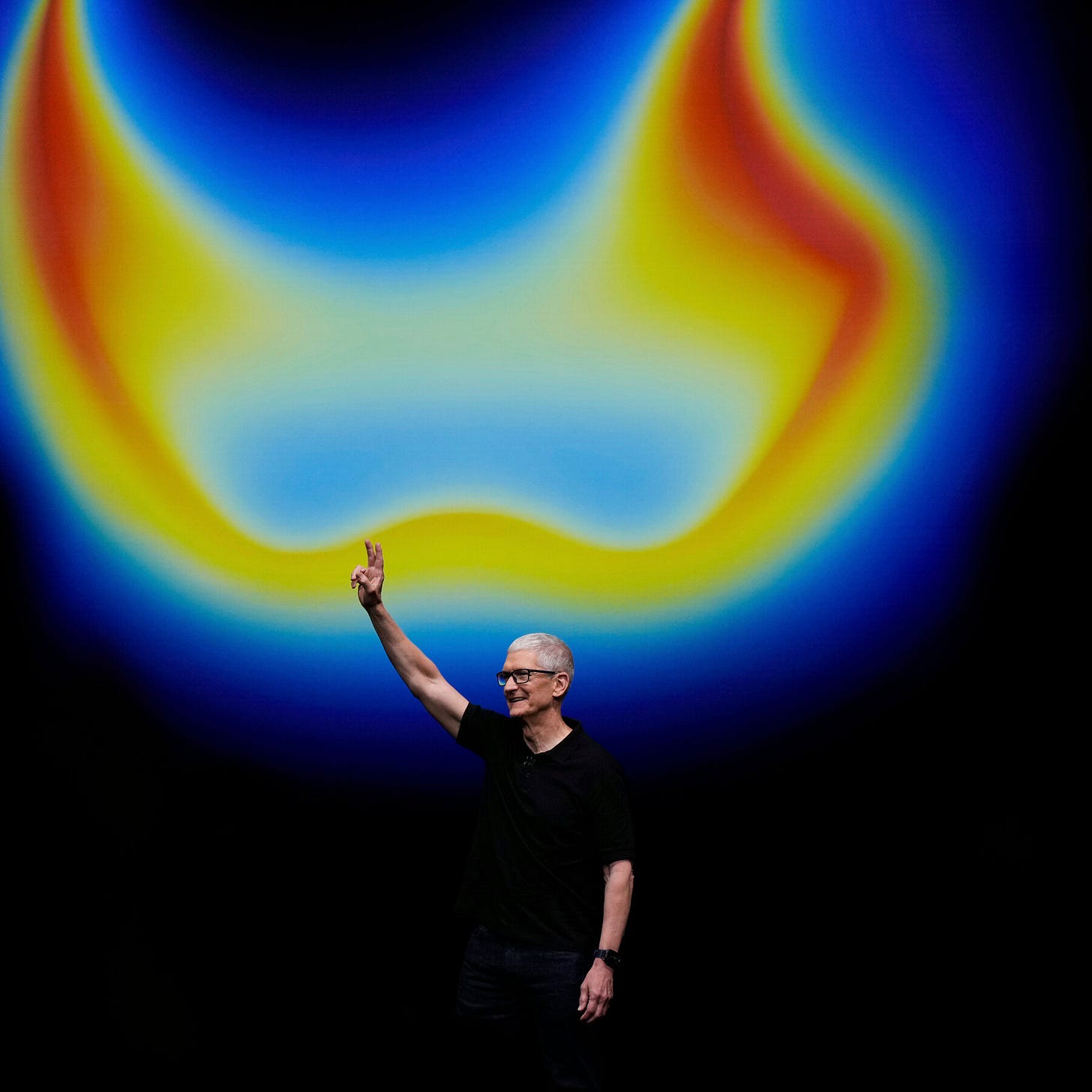

I think the new iPhones are pretty great.

The base iPhone 17 finally gets some key features from the Pro line, including the 120Hz ProMotion display (the lack of which stopped me from buying the most beautiful iPhone ever).

The iPhone Air, meanwhile, is a marvel of engineering: transforming the necessary but regretful camera bump into an entire module that houses all of the phone’s compute is Apple at its best, and reminiscent of how the company elevated the necessity of a front camera assembly into the Dynamic Island, a genuinely useful user interface component.

Oh, and the Air’s price point — $999, the former starting price for the Pro — finally gave Apple the opening to increase the Pro’s price by $100.

What was weird to me yesterday, however, is that my enthusiasm over Apple’s announcement didn’t seem to be broadly shared. There was lots of moaning and groaning about weight and size (get an iPhone Air!), gripes about the lack of changes year-over-year, and general boredom with the pre-recorded presentation (OK, that’s a fair one, but at least Apple ventured to some other cities instead of endlessly filming in and around San Francisco). This post on Threads captured the sentiment:

This is honestly very confusing to me: the content of the post is totally contradicted by the image! Just look at the features listed:

What to Look for in an Entrepreneur

Davidcummings • David Cummings • September 6, 2025

Essay•Venture

Last week I was catching up with an entrepreneur who was thinking about doing some angel investing, and he asked me what I look for in other entrepreneurs. Over the years, I’ve had the opportunity to work with many great people and learn from their experiences. Entrepreneurship is not a one-size-fits-all journey, but I’ve found some common characteristics that show up again and again in successful entrepreneurs.

1. A Desire to Improve

There are constant ups and downs in entrepreneurship. One moment you’re high-fiving your co-founder after signing a new customer, and the next a key employee leaves and you just want to curl up and cry. The entrepreneurs who succeed have a deep desire to improve. They want to learn, grow, figure out what works and what doesn’t, and take lessons from others who have gone before them. This thirst for learning is a key trait.

2. A Unique View on Risk

Many people see starting a new venture as too risky. Entrepreneurs, on the other hand, often have enthusiasm for calculated risk, especially risks that look uncertain or unlikely to succeed to the average person. They either have a unique angle or an unusually strong belief that they can overcome the challenges. This different perspective on what is and isn’t risky is a defining characteristic.

3. A Chip on the Shoulder

Almost every entrepreneur I’ve worked with has had some compelling drive or unusual background that pushes them to prove themselves in an extreme way. The old saying holds true: chips on shoulders equal chips in pockets. In other words, entrepreneurs with something to prove often end up creating significant value for themselves and their investors.

4. MacGyver-Level Resourcefulness

Resourcefulness is another common trait. The best entrepreneurs love to “MacGyver” their way into opportunities, finagling introductions, connecting seemingly unrelated dots, and figuring out how to make progress when others would stop. Whether it’s landing a first customer, solving a daunting problem, or raising a round of funding after many rejections, this tenacity and creativity greatly increase their chances of success.

5. A Glass-Half-Full Outlook

Finally, successful entrepreneurs tend to believe they can change the world, an industry, or even a city. This optimism, sometimes born from blissful ignorance, helps sustain them when progress is slow, opportunities are scarce, or things are going wrong. A positive outlook creates space for unexpected magic, where unexplainable good outcomes seem to come together at just the right time.

Privacy is a Contract

Searls • September 11, 2025

Essay•Regulation•Privacy•MyTerms•Adtech

SD-BASE is a contract you might proffer that means service delivery only. It makes explicit the tacit understanding we have when we go into a store for the first time: that the store’s service is what you came for, and nothing more. Other terms from a roster of MyTerms choices might allow, for example, anonymous use of personal data for AI training. Or for intentcast signaling*.

In the natural world, privacy is a social contract: a tacit agreement that we respect others’ private spaces. We guard those spaces with the privacy tech we call clothing and shelter. We also signal what’s okay and what’s not using language and gestures. “Manners” are as formal as the social contract for privacy gets, but those manners are a stratum in the bedrock on which we have built civilization for thousands of years.

We don’t have it online. The owner of a store who would never think of planting tracking beacons inside the clothes of visiting customers does exactly that on the company website. Tracking people is business-as-usual online.

The reason we can’t have the same social contract for privacy in the online world as we do in the offline one is that the online world isn’t tacit. It can’t be. Everything here is digital: ones, zeroes, bits, bytes, and program logic. If we want privacy in the online world, we need to make it an explicit requirement.

Policy won’t do it. The GDPR, CCPA, DMA, the ePrivacy Directive, and other regulations are all inconveniences for the $trillion-plus adtech (tracking-based advertising) fecosystem.

“Consent” through cookie notices doesn’t work, because you have no way of knowing if “your choices” are followed. Neither does the website, which has jobbed that work out to OneTrust, Admiral, or some other CMP (consent management platform), which we presume also doesn’t know or much care. Nearly all of those “choices” are also biased toward getting your okay to being tracked.

Polite requests also don’t work. We tried that with Do Not Track, and by the time it finished failing, the adtech lobby had turned it into Tracking Preference Expression. Like we wanted to be tracked after all.

What we need are contracts—ones you proffer and sites and services agree to. Contracts are explicit, which is what we need to make privacy work in the online world. Again, there is no tacit here, beyond the adtech fecosystem’s understanding that every person on the Web is naked, and perfect for exploitation.

This is why we’ve been working for eight years on IEEE P7012 Draft Standard for Machine Readable Personal Privacy Terms, aka MyTerms. With MyTerms, you are the first party, and the site or service is the second party. You present an agreement chosen from a limited roster posted on the public website of a disinterested nonprofit, such as Customer Commons, which was built for exactly this purpose.

When the other side agrees, you both keep an identical record. (The idea is for Customer Commons to be for privacy contracts what Creative Commons is for copyright licenses.)
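The flow described above (pick a term from a public roster, have the other side agree, keep identical records on both sides) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the field names, the `proffer` helper, and the SHA-256 fingerprint are hypothetical and are not taken from the IEEE P7012 draft. The only roster entry used is SD-BASE, because it is the only term the essay names.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

# Hypothetical roster. Only SD-BASE is named in the essay; a real roster
# would be published by a disinterested nonprofit such as Customer Commons.
ROSTER = {"SD-BASE": "service delivery only"}


@dataclass(frozen=True)
class AgreementRecord:
    agreement_id: str  # term chosen from the roster
    first_party: str   # the person proffering the term
    second_party: str  # the site or service that agrees
    agreed_at: str     # timestamp of the agreement (ISO 8601 string)

    def fingerprint(self) -> str:
        """Hash a canonical JSON form so the two copies can be compared."""
        canonical = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def proffer(agreement_id, first_party, second_party, agreed_at):
    """Return two identical records, one for each party to keep."""
    if agreement_id not in ROSTER:
        raise ValueError(f"{agreement_id!r} is not on the roster")
    record = AgreementRecord(agreement_id, first_party, second_party, agreed_at)
    return record, AgreementRecord(**asdict(record))


# Illustrative values only: the party names and timestamp are made up.
mine, theirs = proffer(
    "SD-BASE", "visitor", "example-store.test", "2025-09-11T00:00:00Z"
)
print(mine.fingerprint() == theirs.fingerprint())
```

Comparing fingerprints is one simple way either party could later show that both copies still match. A real implementation would presumably also need signatures and a way to look up the roster text, which this sketch leaves out.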

AI

OpenAI Takes Big Steps Toward Its Long-Planned Reorganization

Nytimes • Karen Weise and Cade Metz • September 11, 2025

AI•Funding•OpenAI•Microsoft•CorporateGovernance

Overview

OpenAI signaled a sweeping shift in its structure and financing by announcing it has “reached a tentative deal with Microsoft,” its largest backer, and that it intends to transfer “a $100 billion stake” to the nonprofit that oversees the organization. Together, these moves point to a long-anticipated reorganization—one that could rebalance control, clarify mission alignment, and reshape how outside capital interacts with OpenAI’s governance model, which has historically blended a mission-driven nonprofit with a for‑profit operating entity.

What’s New

OpenAI disclosed a tentative agreement with Microsoft, reinforcing a partnership that has underpinned the company’s infrastructure, distribution, and commercialization strategy.

The company said it would give “a $100 billion stake” to the nonprofit that manages it, indicating a major shift of economic rights or control from existing holders toward its governing body.

These steps suggest an effort to consolidate oversight while preserving strategic alignment with a critical investor and technology partner.

Strategic Rationale

The proposed transfer of such a large stake to its nonprofit overseer likely aims to codify OpenAI’s mission-first guardrails at the ownership level. By placing substantial economic interest or control with the nonprofit, OpenAI can better align incentives around safety, long-term research priorities, and public benefit, rather than near-term growth alone. The tentative deal with Microsoft meanwhile secures continued access to capital, cloud capacity, and go-to-market reach, reinforcing the commercial backbone needed to sustain frontier model development.

Governance and Structure

Mission alignment: Elevating the nonprofit’s stake could tighten the link between governance and the organization’s stated public-interest goals, reducing the risk of mission drift as product lines and revenue scale.

Checks and balances: A larger nonprofit stake may introduce stronger checks on commercialization decisions, including deployment timelines, model releases, and safety thresholds, while still enabling pragmatic collaboration with a strategic investor.

Investor relations: Existing investors and employees may see changes to cap tables, economics, or voting rights depending on how the transfer is structured; “tentative” suggests details remain under negotiation.

Implications for Stakeholders

For Microsoft: The deepened partnership could secure priority access to OpenAI’s research and product pipeline, fortify Azure’s AI moat, and create tighter integration across developer tools and enterprise offerings—while also navigating a governance framework that elevates nonprofit oversight.

For regulators: A reorganization that concentrates influence in a nonprofit may draw scrutiny, but it can also be framed as a pro‑safety move. Competition authorities will watch how technical and distribution advantages flow between OpenAI and Microsoft, especially if exclusivities are extended.

For the AI ecosystem: If successful, this hybrid model—mission-centric control with strategic corporate partnership—could become a template for other frontier labs seeking both scale and guardrails. It may also intensify the race to secure long-term cloud, chip, and capital commitments.

Operational Considerations

Execution risk: Translating “tentative” into finalized agreements requires resolving valuation mechanics, rights, and fiduciary responsibilities between nonprofit and for‑profit entities.

Talent and incentives: The structure must still attract and retain top researchers and engineers; compensation, liquidity pathways, and governance clarity will be essential.

Safety and compliance: With nonprofit control expanded, expect more formalized safety milestones, auditing, and external assurance frameworks around model release and usage.

Key Takeaways

OpenAI says it has a “tentative deal with Microsoft” and plans to give “a $100 billion stake” to its nonprofit manager.

The moves signal a major reorganization intended to align ownership with mission while preserving strategic scale and resources.

Success hinges on final deal terms, regulatory response, and the company’s ability to balance frontier innovation with robust safety governance.

OpenAI Says Spending to Rise to $115B Through 2029: Information

Bloomberg • September 5, 2025

AI•Funding•OpenAI

OpenAI Inc. told investors it projects its spending through 2029 may rise to $115 billion, about $80 billion more than previously expected, The Information reported, without providing details on how and when shareholders were informed.

OpenAI is in the process of developing its own data center server chips and facilities to drive the technologies, in an effort to control cloud server rental expenses, according to the report.
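The two reported figures imply an earlier forecast that the summary does not state. A quick back-of-the-envelope check, assuming both numbers cover the same period and that "about $80 billion" is taken at face value:

```python
# Figures as reported above, in billions of dollars.
projected_total_bn = 115   # projected spending through 2029
reported_increase_bn = 80  # "about $80 billion more than previously expected"

# Implied earlier forecast and how much larger the new one is.
implied_prior_bn = projected_total_bn - reported_increase_bn
growth_multiple = projected_total_bn / implied_prior_bn

print(f"Implied earlier forecast: about ${implied_prior_bn}B")
print(f"New forecast is roughly {growth_multiple:.1f}x the earlier one")
```

Because the increase is given only as an approximation, the implied earlier figure is approximate too.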

ElevenLabs CEO/Co-Founder, Mati Staniszewski: The Untold Story of Europe’s Fastest Growing AI Startup

Youtube • 20VC with Harry Stebbings • September 8, 2025

AI•Tech•ElevenLabs•MatiStaniszewski

ASML to Invest $1.5 Billion in Mistral, the French A.I. Start-Up

Nytimes • September 9, 2025

AI•Funding•ASML•Mistral•Investment

Overview

ASML, a Dutch maker of semiconductor equipment, plans to invest about $1.5 billion in Mistral, a French artificial intelligence start-up. In the article’s core line, “ASML, the Dutch maker of semiconductor equipment, is investing about $1.5 billion in Mistral, the French A.I. start-up.” This single move links one of the world’s most consequential chip equipment companies with a fast-growing European A.I. developer, signaling intensified alignment between compute infrastructure and model innovation across Europe.

Deal Snapshot

Parties: ASML (investor) and Mistral (recipient)

Sector link: Semiconductor equipment meets artificial intelligence software

Scale: “About $1.5 billion,” indicating a commitment well above the billion-dollar mark

Geography: Cross-border within Europe (Netherlands to France)

Why It Matters

Capital intensity in A.I.: The investment acknowledges that cutting-edge A.I. development demands substantial capital for training, deployment, and scaling. A $1.5 billion commitment underscores the scale required to remain competitive in frontier model development and enterprise rollout.

Hardware–software alignment: A strategic tie-up between a chip-tool maker and a model developer could create tighter feedback loops between model requirements and the systems that manufacture the chips those models run on. Even without disclosed terms, the basic structure suggests a bid for more co-optimization across the stack.

European technology ambitions: A large intra-European investment points to efforts to strengthen the region’s A.I. ecosystem. By pairing a European industrial champion with a European A.I. lab, the move could help concentrate know-how, talent, and deployment within the region.

Potential Strategic Rationale

Demand signaling: The investment may reflect confidence in long-term A.I. compute demand, as model builders require sustained access to advanced chips and efficient training and inference pathways.

Product and roadmap insights: Closer proximity to an active A.I. lab can provide early insight into emerging workload patterns, informing how future systems, processes, or manufacturing priorities might evolve.

Ecosystem building: Backing a high-profile start-up can catalyze partnerships with cloud providers, enterprises, and research institutions, forming a broader European A.I. network around shared goals.

Implications for Stakeholders

For enterprise adopters: Greater resources behind a European A.I. start-up may translate into faster product cycles, more robust support, and clearer roadmaps for safely deploying generative and predictive A.I. in regulated industries.

For developers and researchers: The capital can expand compute access, talent hiring, and research throughput, potentially accelerating progress in model capabilities, tooling, and evaluation frameworks.

For policy and industry groups: A prominent intra-European investment can serve as a reference point for industrial policy discussions focused on competitiveness, resilience, and technological sovereignty.

What to Watch Next

Deployment focus: Whether the funding translates into targeted industry solutions (e.g., verticalized A.I. offerings) or primarily fuels foundational model development.

Partnership contours: Any follow-on collaborations around data centers, compute procurement, or co-engineering relationships that align model needs with future silicon and systems.

Market response: How peers—both in chip supply chains and among A.I. start-ups—adjust strategies, including potential new alliances, funding rounds, or joint research initiatives.

Key Takeaways

A landmark cross-sector investment connects a semiconductor equipment leader with a European A.I. start-up.

The “about $1.5 billion” scale highlights the capital requirements of modern A.I. and the strategic value of aligning hardware and software.

The move signals confidence in long-term A.I. demand and may strengthen Europe’s role in the global A.I. race.

Watch for downstream partnerships, product acceleration, and ecosystem effects as the investment unfolds.

ASML and Mistral agree €1.3bn blockbuster European AI deal

Ft • September 8, 2025

AI•Funding•ASML•Mistral•USChinaTensions

Overview

ASML, the Dutch semiconductor equipment leader, has struck a €1.3bn agreement with Mistral, the French artificial intelligence company, in a move framed as a strategic European response to intensifying US‑China tech rivalries. The deal positions a core hardware gatekeeper of the global chip supply chain alongside one of Europe’s fastest‑rising AI model developers, signaling a bid to consolidate regional capabilities across compute, hardware, and foundational model development. The article characterizes Mistral as a national “AI champion,” and notes that escalating geopolitical tensions are reshaping corporate and government priorities across the industry. The reported value—€1.3bn—underscores an unusually large, Europe‑focused AI transaction, suggesting long‑term financial backing and collaboration rather than a narrow, short‑term contract.

What the deal signals

Scale and intent: The €1.3bn figure indicates a multi‑year commitment that likely spans R&D, infrastructure, and deployment support, rather than a singular investment round or procurement agreement.

European capacity building: By pairing ASML’s central role in advanced lithography with Mistral’s model‑building capabilities, the partnership aims to deepen Europe’s self‑reliance in the AI stack—from compute access to applied model integration.

Strategic endorsement: The “backs” framing suggests ASML is not merely a customer; it is endorsing and enabling a home‑grown foundation model player, elevating Mistral’s position in enterprise and public‑sector markets.

Geopolitical hedging: As export controls and supply constraints reshape chip flows, aligning a European equipment leader with a European model developer reduces exposure to external chokepoints and policy whiplash.

Context: US‑China tensions and supply chain realities

Great‑power competition has made access to cutting‑edge compute and manufacturing tools a policy lever. ASML’s tools are already central to global advanced chipmaking; closer ties with a European AI developer provide a regional counterweight while navigating an increasingly fragmented regulatory landscape.

For AI companies, the most durable competitive advantages are shifting toward secure compute availability, dependable hardware roadmaps, and trusted data pipelines—areas where cross‑border European partnerships can smooth procurement and compliance.

Potential implications

For European AI: Expect accelerated model training cycles, more predictable compute access, and stronger commercialization pipelines in sectors like government, defense‑adjacent industries, and regulated enterprise verticals.

For competitors: The deal could pressure US hyperscalers and Chinese model labs by demonstrating that Europe can mobilize large‑scale capital and industrial champions to support local AI leaders.

For policy alignment: It dovetails with Europe’s push for digital sovereignty, data protection, and trustworthy AI, potentially creating reference deployments that meet stringent European regulatory expectations.

For supply chains: Tighter coupling between upstream equipment and downstream AI applications may lead to co‑designed solutions that optimize model performance per watt and per euro—crucial as compute costs rise.

Key takeaways

A rare, Europe‑centric AI transaction of €1.3bn elevates Mistral and signals ASML’s strategic role beyond equipment supply.

The partnership is both industrial and geopolitical—aimed at resilience, autonomy, and competitiveness amid US‑China tensions.

Expect faster productization, stronger enterprise adoption, and a template for future alliances linking European hardware and AI model capabilities.

The AI Agent Reality Check: Why Managing Always-On AI Workers Is Your Next Big Challenge

Saastr • Jason Lemkin • September 8, 2025

AI•Work•AIAgents•Automation•SaaS

The brutal truth about AI agents that nobody talks about online or at conferences.

I’ve been running SaaStr itself and B2B companies for over two decades. I’ve managed teams across time zones, dealt with burnout, navigated the remote work revolution, and thought I’d seen every workforce challenge imaginable.

I was wrong.

After deploying 11 true AI agents across SaaStr operations, I’ve discovered the most counterintuitive management challenge of our generation: It’s not about making humans work harder. It’s about keeping up with workers who never stop.

The 996 Fallacy vs. The AI Reality

For years, Silicon Valley has debated work-life balance. The infamous “996” culture (9am-9pm, 6 days a week) became a symbol of unsustainable hustle culture during the 2020-2022 era. We fought for better boundaries, more reasonable hours, and human-centered workplaces. Then, over the past 12-18 months, 996 became a symbol of the AI era.

Now AI agents have arrived, and suddenly we’re dealing with something entirely different: workers who operate 24/7/365 without breaks, vacations, or sleep.

Your AI agents don’t just work 996. They work 996++. They’re processing data at 3 AM. They’re optimizing campaigns on weekends. They’re analyzing customer feedback during your family dinner. They never stop, never tire, never ask for time off.

This isn’t a feature—it’s your biggest operational challenge.

OpenAI Backs AI-Made Animated Feature Film

Wsj • Jessica Toonkel • September 7, 2025

AI•Funding•OpenAI•Critterz•Cannes

The film, called “Critterz,” aims to debut at the Cannes Film Festival and will leverage the startup’s AI tools and resources.

OpenAI is backing production of the AI-assisted animated feature, providing software and computing power to help accelerate the filmmaking process. The project is led by Chad Nelson, a creative specialist at the company, and expands on a 2023 short developed with OpenAI image-generation tools. The feature-length version is being produced in collaboration with UK-based Vertigo Films and Los Angeles studio Native Foreign.

“Critterz” follows a community of forest creatures whose world is upended by the arrival of an unexpected stranger. The filmmakers plan to complete the movie on a timeline of about nine months—far faster than conventional animated features—while keeping the budget under $30 million. Human voice actors and artists remain central to the production, with AI used to iterate on concept art, environments, and certain animation workflows.

The team is exploring a profit-sharing model across a roughly 30-person crew and aims for a global theatrical release in 2026. While the film does not yet have a distribution partner, the Cannes premiere target is intended to showcase how generative tools can reduce costs and speed up production without replacing human creativity. The effort comes amid ongoing industry debates about copyright, labor concerns, and the appropriate role of AI in entertainment.

The AI Browser Wars

Tanayj • Tanay Jaipuria • September 9, 2025

AI•Tech•Browser Wars•Chrome•Perplexity Comet

Hi friends,

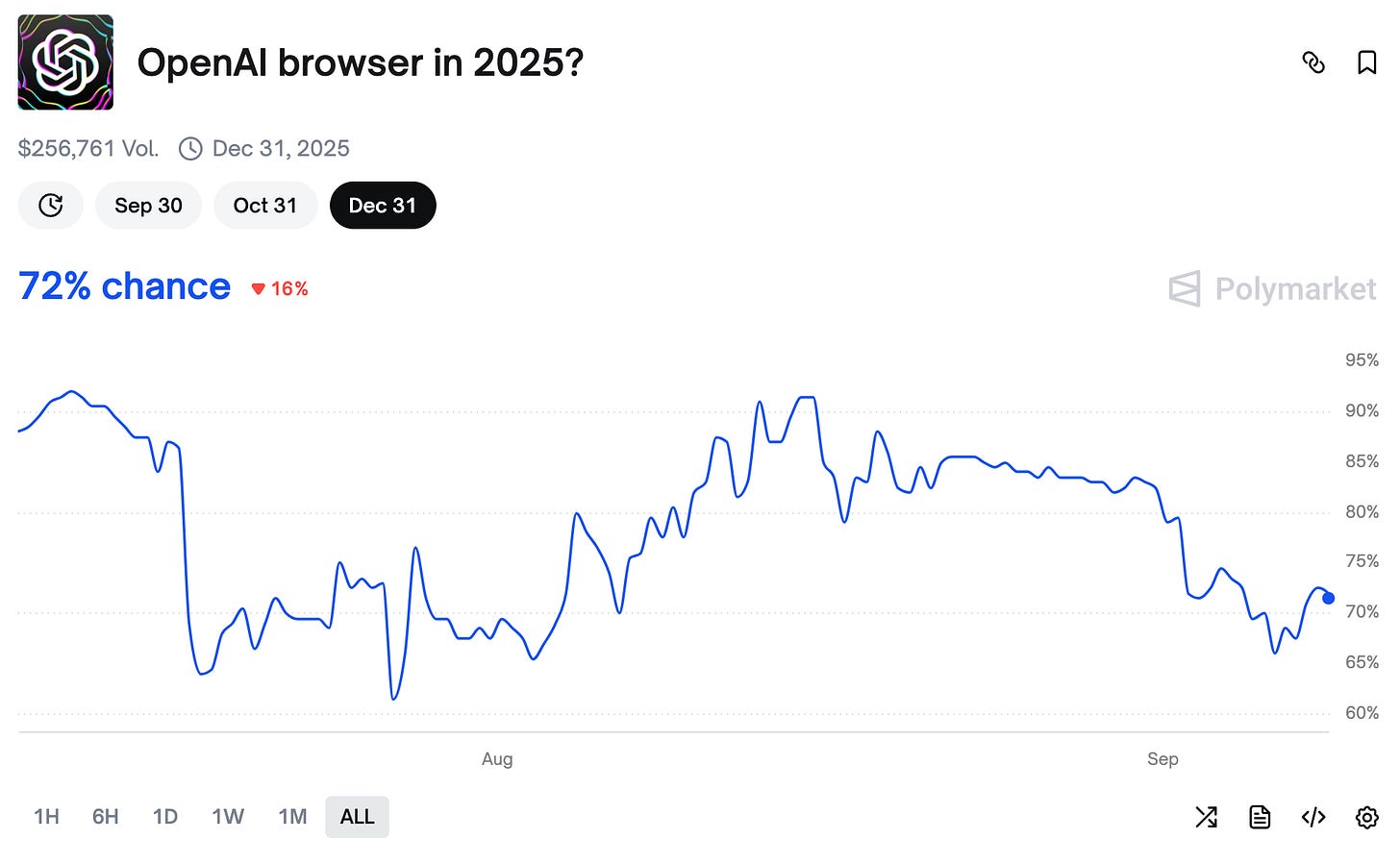

A lot is happening in AI browser land, so let’s talk about it. Just this past week, Atlassian agreed to buy The Browser Company, the maker of Arc and Dia, for $610M in cash, and a federal judge declined to force Google to sell Chrome, which keeps the incumbent’s distribution power intact. Perplexity continues to roll out Comet more broadly. OpenAI is widely reported to be prepping a browser of its own.

Let’s jump in and discuss:

Why the browser matters

Who the major AI browser contenders are

Why the browser matters for AI

I expect the browser to be an interesting battleground going forward for the following reasons.

Distribution surface: Default slots in browsers are worth billions. Google paid Apple about $20B in 2022 to be Safari’s default search on iOS, and spent $26.3B in 2021 across devices and platforms to secure default status. A recent ruling lets Google keep paying partners for defaults, which preserves that distribution engine. Mozilla’s finances also show how powerful this channel is, with a large majority of revenue tied to its Google search deal. Search was the original beneficiary of these defaults, and chat assistants are now stepping into the same front door.

Place where agents happen: The browser already sits where work happens, so it is a natural potential control plane for agents. You can grant page context, watch steps, and take over when needed. That is why new products position the browser as an agent desk. Perplexity’s Comet launched as an agentic browser that researches and acts from the page. The Browser Company’s Dia makes it easy to use AI skills and assistants using the context of a webpage. Together, these moves show agents gravitating to the browser surface first, particularly where a human stays in the loop. The browser also holds identity, cookies, and app state across the various apps and tools people use, which further supports this idea.

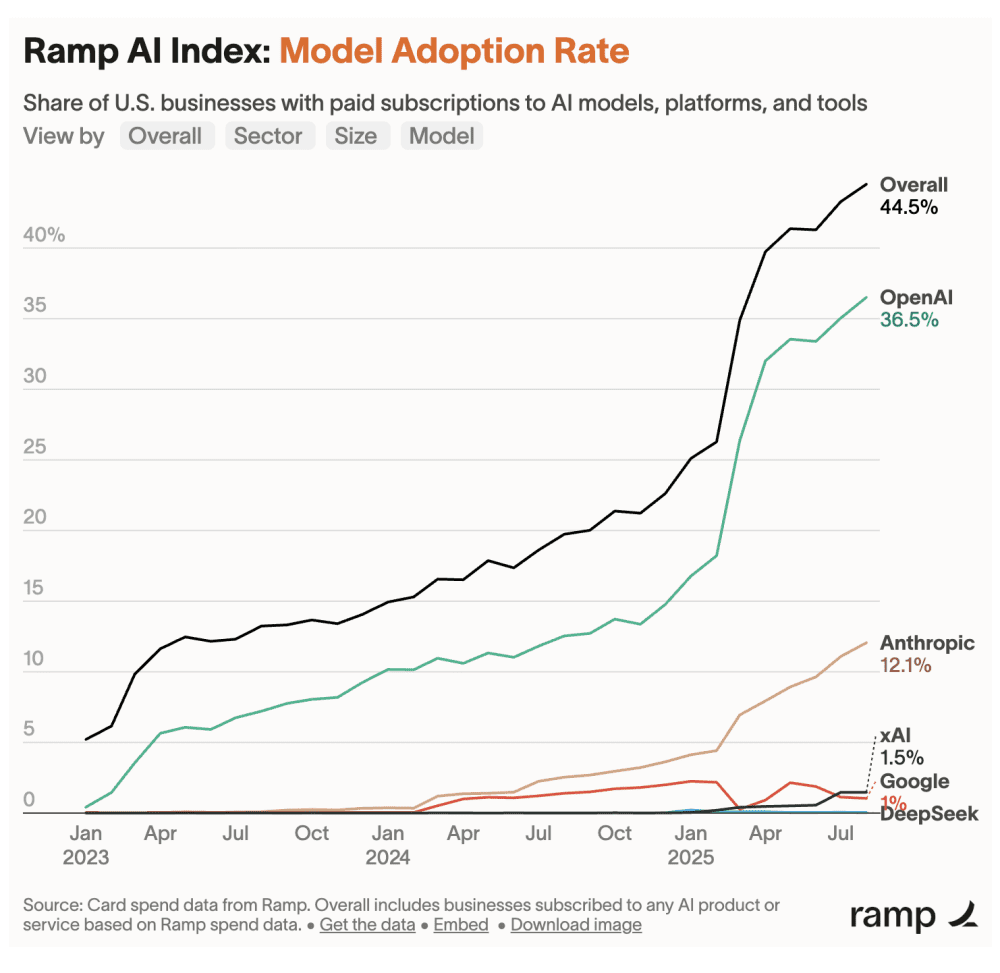

OpenAI vs. Anthropic: Ramp Data Shows 36% vs. 12% Penetration, But Velocity Curves Tell a Different Story

Saastr • Jason Lemkin • September 11, 2025

AI•Data•OpenAI

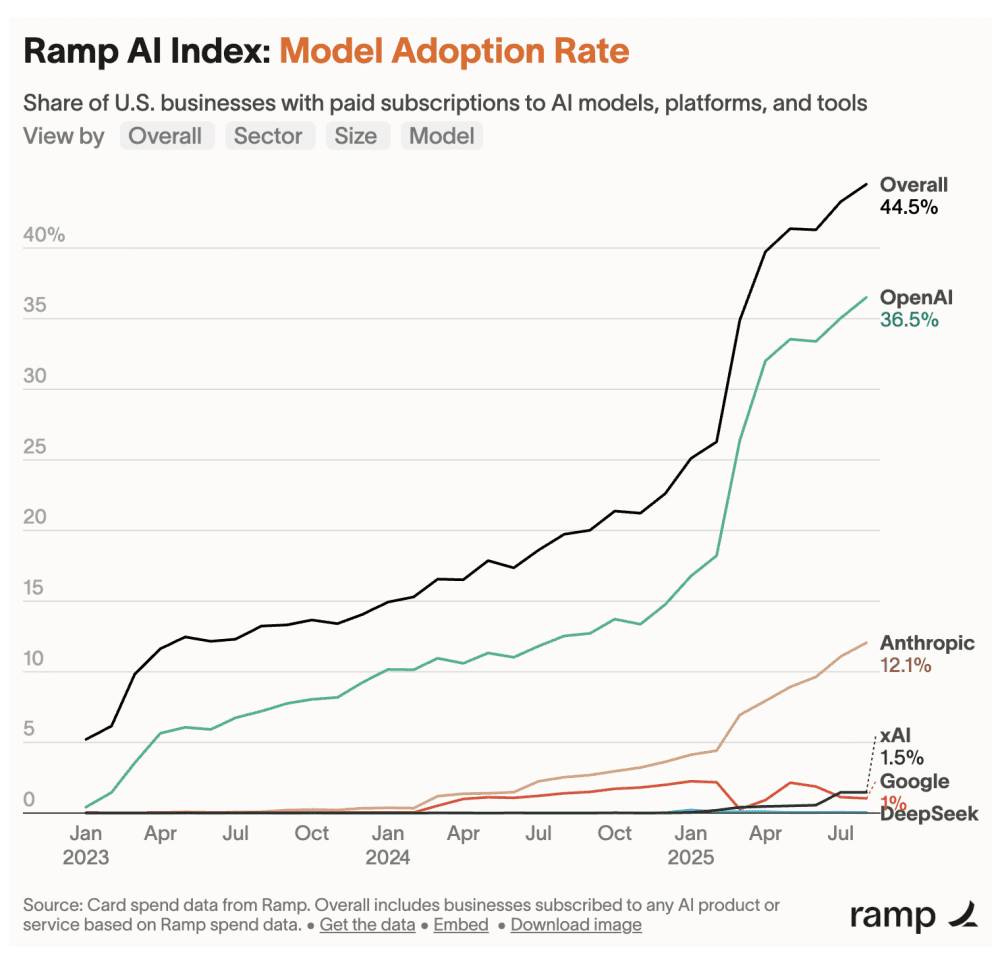

Ramp just released its latest data on LLM model spend, and it shows about what you’d expect … but it also highlights Anthropic’s strong momentum. Ramp itself has crossed a stunning $1B ARR and manages billions and billions of spend:

Summary & 2026-2027 Projections:

Current landscape: OpenAI leads at 36.5%, Anthropic accelerating at 12.1%, with 44.5% overall business adoption

Key insight: This reflects discrete AI purchases, not enterprise contract spending—Google’s 1% severely understates actual usage

2026 projection: Anthropic likely reaches 20-25% of business wallets, OpenAI plateaus around 40-45%, overall adoption hits 70-80%

Strategic implication: Credit card data captures the “prosumer enterprise” segment—critical for go-to-market but incomplete for total market assessment

Bottom Line Up Front: OpenAI dominates discrete AI purchases at 36.5% adoption among U.S. businesses, but this credit card spending data tells only part of the enterprise AI story—and reveals a massive blind spot in how we measure AI market share.

The latest data from Ramp Economics Lab just dropped a bombshell on the AI industry’s favorite parlor game: who’s really winning the LLM wars? Based on business credit card spend data from U.S. businesses with paid AI subscriptions, we’re finally getting real market insight beyond the hype cycles and PR announcements. But there’s a crucial caveat every SaaS leader needs to understand.

The Critical Data Limitation: Credit Cards vs. Enterprise Contracts

This Ramp data tracks business credit card transactions for AI subscriptions—which means we’re seeing discrete AI tool purchases, not massive enterprise API contracts or bundled cloud spending. That distinction completely changes how we interpret these numbers:

What’s Actually Being Measured: SMBs buying ChatGPT Team, mid-market companies expensing Claude Pro, startups purchasing API credits on corporate cards. This is the “prosumer enterprise” segment—businesses making tactical AI purchases rather than strategic platform commitments.

What’s Missing in the Data: Google’s 1% market share of Ramp spend suddenly makes sense. Most Google AI usage happens through existing Cloud Platform contracts, Workspace bundles, or massive enterprise deals that never touch a corporate credit card. The same logic applies to Microsoft/Azure OpenAI deployments and AWS Bedrock usage.

The Current Leaderboard: 44.5% Overall Adoption in the Visible Market

Looking at the share of U.S. businesses with paid credit card subscriptions to AI models, platforms, and tools:

Overall AI Adoption: 44.5% – Businesses making discrete AI purchases (the visible iceberg tip)

OpenAI: 36.5% – Dominates direct-pay subscriptions but this understates enterprise API usage

Anthropic: 12.1% – Strong in subscription model, likely reflecting their direct-pay positioning

xAI: 1.5% – Limited enterprise traction despite Musk’s platform, but quickly up from basically 0%

Google: 1% – Severely understated due to bundled/contract spending patterns

DeepSeek: <1% – Minimal commercial adoption despite open-source buzz
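The leaderboard figures can be read two ways, and a short calculation makes the difference concrete. The sketch below assumes every percentage uses the same base (all U.S. businesses in the Ramp data set) and leaves out DeepSeek, since "<1%" is not an exact value. It shows each vendor's share among businesses that pay for any AI tool, and how far the vendor figures overshoot the overall adoption rate.

```python
# Adoption figures quoted above, in percent of U.S. businesses.
overall = 44.5
vendors = {"OpenAI": 36.5, "Anthropic": 12.1, "xAI": 1.5, "Google": 1.0}

# Share of AI-paying businesses that pay each vendor.
share_of_adopters = {
    name: round(100 * pct / overall, 1) for name, pct in vendors.items()
}

# The vendor figures add up to more than the overall rate, so some
# businesses must hold more than one vendor subscription. The excess is
# a lower bound on those extra subscriptions, in percentage points.
excess_points = round(sum(vendors.values()) - overall, 1)

print(share_of_adopters)
print(excess_points)
```

Under these assumptions, a large majority of the businesses buying AI on a card pay OpenAI, and the excess indicates that multi-vendor purchasing is already happening. The excess counts extra subscriptions, not businesses, and it is a floor because the overall rate also covers tools from vendors not listed here.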

OpenAI Signs $300 Billion Data Center Pact With Tech Giant Oracle

Nytimes • September 10, 2025

AI•Funding•DataCenters•Oracle•OpenAI

Overview

OpenAI has entered a sweeping data center arrangement with Oracle that is described as a $300 billion pact to accelerate construction of U.S.-based A.I. infrastructure. According to the report, the funding “covers more than half of the A.I. data centers that OpenAI plans to build in the U.S. over the next several years,” signaling a multi-year, nationwide buildout of high-performance computing capacity. This scale points to an aggressive roadmap for expanding training and inference resources to meet surging demand for advanced models and enterprise deployments.

Scale and financing

The headline figure underscores two related realities: frontier A.I. now requires industrial-scale capital, and the bottleneck has shifted from algorithms to power, land, chips, and buildings. A $300 billion commitment, if deployed over several years, would rival or exceed the capex of entire hyperscale clouds in prior cycles. The detail that the funding covers “more than half” of OpenAI’s planned U.S. sites implies a portfolio approach—multiple campuses across states with favorable power prices, land availability, and streamlined permitting. Oracle’s participation suggests a blend of cloud services, financing structures, and potentially long-term capacity reservations tailored to A.I. workloads.

Infrastructure and siting considerations

Delivering this footprint will hinge on four constraints:

Power availability and grid interconnects: Large A.I. campuses can require gigawatts of capacity, driving co-development with utilities, substation builds, and long-lead transmission upgrades.

Cooling and water: Heat density from advanced accelerators pushes operators toward liquid cooling and, in some regions, reclaimed water or air-cooled designs to minimize freshwater draw.

Supply chains: Securing accelerators, networking gear, and transformers at scale requires multi-year procurement and close coordination with chip and power-equipment makers.

Speed-to-market: Modular designs, standardized data hall blocks, and pre-approved site plans will be critical to meet “over the next several years” timelines.

Strategic motives and division of roles

For OpenAI, the arrangement concentrates capital and execution risk with a partner equipped to finance, build, and operate cloud-scale facilities. For Oracle, it positions the company as a central enabler of A.I. compute capacity, potentially deepening its role in training and serving state-of-the-art models while expanding enterprise offerings. The structure likely blends dedicated capacity for OpenAI with cloud-region expansion that benefits Oracle’s broader customer base, aligning incentives across utilization, efficiency, and uptime.

Economic and policy implications

A program that covers more than half of OpenAI’s planned U.S. data centers will ripple across labor markets, local tax bases, and state-level industrial strategies. Expect intensifying competition among states for sites, with incentives tied to jobs, grid upgrades, and clean-energy procurement. At the federal level, the concentration of critical A.I. infrastructure may draw scrutiny related to competition, supply-chain security, and energy policy. The buildout could accelerate adoption of renewable power purchase agreements, spur investments in battery storage and firming resources, and catalyze innovation in heat reuse, microgrids, and demand-response.

Risks and execution challenges

Key risks include delays in utility interconnection, scarcity of specialized equipment (notably transformers and high-speed networking), and the pace of accelerator deliveries. Cost escalation—from construction materials to energy prices—could pressure budgets and timelines. Community concerns over water usage and land development may require additional mitigations and transparency. Finally, the rapid cadence of A.I. model evolution raises the risk of technological obsolescence; designs must remain adaptable to new architectures and higher power densities.

Key takeaways

Reported pact size: $300 billion, aimed at accelerating OpenAI’s U.S. A.I. data center expansion.

Coverage: “More than half” of OpenAI’s planned U.S. sites over “the next several years,” indicating a multi-campus, multi-year program.

Strategic fit: Pairs OpenAI’s compute needs with Oracle’s financing, cloud operations, and data center delivery capabilities.

Broader impact: Significant implications for U.S. energy grids, supply chains, and state-level economic development, alongside potential regulatory attention.

Execution focus: Power procurement, cooling innovation, supply-chain resilience, and modular construction will determine speed and cost-efficiency.

Media

Techmeme is Turning Twenty

Pxlnv • Nick Heer • September 9, 2025

Media•Journalism•Techmeme•GabeRivera•NewsAggregation

Fred Vogelstein, of Crazy Stupid Tech, interviewed Techmeme founder Gabe Rivera on the occasion of its forthcoming twentieth anniversary:

But Techmeme looks and works exactly the same way as it always has. And it has never been more popular. Traffic is up 25 percent this year, likely driven by the explosion of interest in AI, Rivera says.

[…]

Now the software finds and ranks the stories. But the editors write the headlines. When stories are generated by corporate press releases/announcements, they choose which media outlet’s story is driving the most interesting social media conversations. The software also chews on the API feeds from the big social networks to come up with a list of the most useful conversations. Editors approve all those, however, to prevent duplication.

Since I learned about Techmeme in the late 2000s or early 2010s, I have admired many of its attributes. Its barely-changing design speaks to me, especially with its excellent use of Optima. More than anything, however, is its signature way of clustering related stories and conversations that keeps me coming back. That feature was one of the sources of inspiration for this very website. The differing perspectives are useful beyond a single story or topic; it has been a source of discovery for other websites and writers I should keep an eye on.

The steadiness of the site masks some of the changes that have been made over the years, however. Not too long ago, the community discussion section of any topic was merely a list of tweets. However, since about 2023, I think, it has also incorporated posts from other social networks and message boards. This is a welcome improvement.

Silicon Valley trends may come and go, but I hope Techmeme continues to evolve at its present geological pace.

Apple Introduces iPhone 17, iPhone Air and Updated AirPods at Annual Event

Nytimes • Tripp Mickle and Brian X. Chen • September 9, 2025

AI•Tech•iPhone

As inflation has risen, some businesses have flourished by selling more for less. But with its newest iPhone, Apple is taking the opposite approach and offering less for more.

On Tuesday, the company unveiled a new iPhone that is thinner and smaller than previous models. The titanium-encased smartphone is a third less thick than previous models but has fewer cameras and less battery life than its predecessors. It is called the iPhone Air and costs $999.

The single camera has a second telephoto lens and uses artificial intelligence to sharpen images, allowing it to offer the same photography features as previous models, Apple said. It also has software to conserve battery, so that it can offer all-day battery life.

“It’s a paradox you have to hold to believe,” said Abidur Chowdhury, an industrial designer who introduced the device during an hourlong product event from the company’s headquarters in Cupertino, Calif.

The device headlined an event that was punctuated by announcements of price increases for some of its most popular iPhones, with the cost of the Pro and Pro Max models rising by $100, to $1,099 and $1,199.

And in a move that increases Apple’s profit margins on the iPhone 17 Pro, the company replaced the titanium found on previous Pro phones with aluminum, a cheaper material. In a marketing video, Apple said it had to revert to aluminum because it redesigned the phone’s hardware.

Apple’s creator-centric iPhone 17 Pro will make the vlogging camera obsolete

Techcrunch • Amanda Silberling • September 9, 2025

Media•Social

Apple unveiled its new line of iPhones on Tuesday, and the iPhone 17 Pro is making a direct appeal to content creators.

For years, the iPhone camera has met the needs of most casual users, effectively replacing consumer point-and-shoots. But content creators, an audience estimated in the hundreds of millions, have still relied on dedicated handheld video cameras from Canon, Sony, Panasonic, Nikon and Fujifilm.

Those companies now sell entire “vlogging camera” lines with flip-out screens and social-friendly formats. The iPhone 17 Pro, however, is positioned to be the device that finally sidelines those extra cameras.

A major change is the 17 Pro’s camera sensor, which is 56% larger than the iPhone 16 Pro’s. A bigger sensor boosts low‑light performance, depth of field control and overall image quality, so the baseline technical capabilities jump significantly.

Even under closer scrutiny, the package stands out for a half‑pound device. The main, ultrawide and telephoto modules are all 48‑megapixel fusion cameras, enabling optical zoom steps at 0.5x, 1x, 2x, 4x and 8x. The telephoto moves up from 12 MP on the 16 Pro, and the front‑facing camera increases from 12 MP to 18 MP.

Apple’s camera architecture lead emphasized that wider fields of view at higher resolution particularly help when filming yourself, reinforcing the pitch that the Pro models are built for creators.

Video is the real focus. Like its predecessor, the iPhone 17 Pro records 4K at 120 fps in Dolby Vision, but new creator‑centric tools differentiate it further. Dual front‑and‑back camera recording arrives across the iPhone 17 lineup, and the front camera supports Center Stage framing in both horizontal and vertical orientations without rotating the phone. On the Pro, ultra‑stabilized 4K at 60 fps targets creators shooting on the move.

For multi‑camera workflows, the 17 Pro adds Genlock support so multiple cameras can sync cleanly, with an API for developers to build custom rigs.

Alongside the hardware, Apple is releasing Final Cut Camera 2.0, a free upgrade that brings more pro‑level control. It enables shooting in ProRes RAW for faster exports and smaller files without quality trade‑offs, and introduces open‑gate recording that uses the full sensor for flexible reframing, stabilization and aspect‑ratio decisions after the fact.

The iPhone still has to be more than a camera, but for many creators, consolidating into a single device is compelling on its own.

Apple Debuts AirPods Pro 3 With Heart-Rate Monitor, Better Fit

Bloomberg • September 9, 2025

Media•Journalism•Apple•AirPodsPro

Apple Inc. introduced its first new AirPods Pro model in three years, adding new health-tracking features, improved noise cancellation and a better fit.

Apple said the $249 earbuds add a heart-rate sensor and boost music playback to eight hours.

Google Says the Open Web Is ‘in Rapid Decline’, But Insists It Means in an Advertising Sense

Pxlnv • September 8, 2025

Media•Advertising•Google

Barry Schwartz, Search Engine Roundtable:

“Google’s CEO, Sundar Pichai, said in May that web publishing is not dying. Nick Fox, VP of Search at Google, said in May that the web is thriving. But in a court document filed by Google on late Friday, Google’s lawyers wrote, ‘The fact is that today, the open web is already in rapid decline.’ This document can be found over here (PDF) and on the top of page five, it says:”

“The fact is that today, the open web is already in rapid decline and Plaintiffs’ divestiture proposal would only accelerate that decline, harming publishers who currently rely on open-web display advertising revenue.”

This is, perhaps, just an admission of what people already know or fear. It is a striking admission from Google, however, and appears to contradict the company’s public statements.

Dan Taylor, Google’s vice president of global ads, responded on X:

“Barry – in the preceding sentence, it’s clear that Google’s referring to ‘open-web display advertising’ – not the open web as a whole. As you know, ad budgets follow where users spend time and marketers see results, increasingly in places like Connected TV, Retail Media & more.”

Taylor’s argument appears to be that users and time are going to places other than the open web and so, too, is advertising spending. Is that still supposed to mean the open web is thriving?

Robinhood finally wins spot in S&P 500

Youtube • CNBC Television • September 5, 2025

Media•Broadcasting•S

What Happened and Why It Matters

The segment explains that Robinhood has been added to the S&P 500, a milestone that signals the company now meets the index committee’s core criteria around size, liquidity, float, and consistent profitability.

Anchors and guests frame the inclusion as both a validation of Robinhood’s maturation from a high-growth fintech to an established public company and a catalyst that could reshape trading dynamics in its shares.

Index Mechanics and Flow Implications

Inclusion typically prompts mechanical buying from passive index funds and closet indexers that track the S&P 500, potentially creating near‑term upward demand for the stock and elevated volumes around the effective date.

The discussion highlights common patterns around index additions: pre‑rebalance run‑ups, elevated intraday volatility during the closing auction on the effective day, and normalization afterward as active managers rebalance.

Market makers and options desks may see heavier activity as hedging demand increases; liquidity generally improves, though bid‑ask dynamics can be choppy through the transition window.

Signals About Robinhood’s Fundamentals

Commentators link the decision to Robinhood’s progress toward sustained GAAP profitability, stronger operating metrics, and an expanded product set (including brokerage, crypto trading, and higher‑yield cash and debit products).

The panel notes that S&P inclusion often coincides with management’s improved cost discipline, clearer revenue visibility, and a broadened investor base beyond momentum traders to long‑only mutual funds and pensions.

Valuation, Volatility, and Ownership Mix

The segment emphasizes that while index‑driven buying can lift the stock in the short term, long‑term performance still depends on earnings quality, user engagement, net interest income durability, and diversification beyond trading‑sensitive revenues.

Ownership composition tends to shift after inclusion: a higher share of passive holders can dampen idiosyncratic swings over time, even as options activity remains elevated given Robinhood’s active retail profile.

Analysts on the program weigh the prospect of a “valuation premium” that some S&P constituents enjoy due to broader coverage and deeper pools of capital, balanced against the risk that multiple expansion may prove temporary if growth stalls.

Strategic and Competitive Context

Speakers situate Robinhood’s move within the broader brokerage landscape, noting increased competition on pricing, yield, and product breadth from incumbents and fintech peers.

Key differentiators discussed include mobile‑first design, rapid product iteration, and brand reach among younger investors. These are weighed against regulatory scrutiny over practices like payment for order flow and the need for robust risk controls.

Trading Calendar and Market Microstructure Notes

Expect heavier volumes into the index’s implementation window and a potentially outsized closing cross as index funds complete buys.

Liquidity providers may adjust inventories ahead of time; any imbalance could result in sharp end‑of‑day prints on the effective session, followed by tighter spreads as the new equilibrium sets in.

Risks and Watch Items

Regulatory developments affecting retail trading, crypto market cycles, and interest‑rate sensitivity of net interest income remain central variables.

Retention and monetization of active users, expansion into advisory and retirement products, and execution quality are flagged as levers that can sustain post‑inclusion momentum.

Key Takeaways

S&P 500 inclusion validates Robinhood’s scale and profitability progress and broadens its investor base.

Short‑term flows and volatility are likely around the rebalance, but durable returns depend on fundamentals and product diversification.

Competitive pressures and regulation remain the primary overhangs; improved liquidity and coverage are tangible benefits of joining the index.

OpenAI Backs AI-Made Animated Feature Film

Wsj • Jessica Toonkel • September 7, 2025

Media•Film•OpenAI•GenerativeAI•Cannes

Overview

A forthcoming animated feature, “Critterz,” is being developed with generative AI and is targeting a debut at the Cannes Film Festival. The project is backed by OpenAI and will draw on a startup’s AI tools and resources to drive core elements of production. The premise signals a push to move AI-assisted content beyond shorts and experimental clips into full-length, festival‑grade filmmaking, positioning the team to test whether audiences and gatekeepers will embrace an AI-made feature on one of the world’s most visible stages. The Cannes ambition underscores confidence in the film’s artistic quality and commercial potential, while also serving as a high-profile proof of concept for AI-native production pipelines.

What this suggests about the production model

The creative workflow likely blends human direction with AI systems for storyboarding, asset generation, animation, and postproduction polish.

Using a startup’s proprietary tools indicates a vertically integrated approach, where model selection, tooling, and compute are tuned to animation-specific needs rather than generic text-to-image/video outputs.

An AI-first pipeline could compress timelines for iterating on character designs, environments, and scenes, and may shift budget from large animation teams to model training, inference, and specialized prompt/rig control.

Strategic motivations

For OpenAI, supporting an AI-generated feature advances a showcase strategy: demonstrating that frontier models are not just capable of short clips but can sustain narrative coherence, visual consistency, and character continuity over feature length.

A Cannes bid helps frame the project as cinema rather than tech demo, courting critics, distributors, and streamers that increasingly scout festivals for distinctive IP.

For the startup, association with a marquee backer and a top-tier festival can accelerate business development with studios, tool licensing, and partnerships across VFX and animation houses.

Implications for the industry

If “Critterz” secures a Cannes slot and favorable reception, it could validate AI-driven animation as a viable tier in the production stack, prompting studios to adopt hybrid workflows (AI previsualization and layout; human-led story, direction, and final polish).

Tooling that reliably preserves character identity, lip sync, and motion continuity over long runtimes would mark a step-change from the variability common in short-form AI generation, potentially reducing reliance on extensive keyframing and rotoscoping.

Distribution stakeholders will watch for audience tolerance of AI-generated aesthetics, the film’s emotional resonance, and whether the pipeline can deliver at competitive cost without sacrificing quality.

Risks and open questions

Artistic consistency over 80–120 minutes remains a technical hurdle; drift in style, physics, or facial continuity can break immersion.

Rights, credits, and labor considerations will be scrutinized, including how human contributors are acknowledged and how training data provenance is handled.

Festival programmers may weigh innovation against cinematic merit; novelty alone won’t secure selection or distribution.

What to watch next